Idaho State University researchers continue work on augmented reality device to aid arm rehabilitation

November 7, 2017

POCATELLO — Idaho State University mechanical engineering and physical therapy faculty and graduate students have teamed up to create a virtual reality system that will potentially assist with arm rehabilitation for people who have suffered from strokes.

“We have accomplished half of the work, which is creating the engineering systems to test this work and now we have to develop the protocol for using it for rehabilitation to see how well it works,” said Alba Perez-Gracia, ISU chair and associate professor of mechanical engineering, and a lead researcher on the project.

The ISU researchers, who are working on this collaborative project with Texas A&M and California State University, Fullerton, first mapped and digitized arm motions and then created a virtual world in which people wearing a portable virtual-reality device can use the system as a therapeutic intervention. The researchers will soon be testing the new tool with human subjects.

Subjects wear a virtual reality headset and use it to complete tasks created for the virtual world. The virtual reality system picks up the actual movements of their own arm and displays them as a cartoon figure within the virtual world. The subject may then participate in virtual world tasks that include picking up balls and throwing them at a target or stacking cubes using their right or left hand. In addition, the system has been developed to reflect the image of the arm being used.

For example, if a person is using the right arm to complete the task, the virtual reality system reflects the image so that the cartoon arm appears to be the left arm performing the task. This reflected image of arm function has the potential to be used as a therapeutic intervention because previous research has shown that observing an action activates the same area of the brain as performing the action.
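The reflection described above can be pictured as a simple geometric mirror. The following is a minimal sketch only, not the ISU team's implementation: it assumes tracked joints are available as 3D points, that the x-axis runs left to right across the body, and that joint names carry a `right_` or `left_` prefix. The function name `mirror_arm` and the sample coordinates are hypothetical.

```python
from typing import Dict, Tuple

Point = Tuple[float, float, float]


def swap_side(name: str) -> str:
    """Rename a joint to the opposite side of the body (assumed naming scheme)."""
    if name.startswith("right_"):
        return "left_" + name[len("right_"):]
    if name.startswith("left_"):
        return "right_" + name[len("left_"):]
    return name


def mirror_arm(joints: Dict[str, Point], midline_x: float = 0.0) -> Dict[str, Point]:
    """Reflect joint positions across the vertical plane x = midline_x.

    Only the left-right coordinate changes; height (y) and depth (z) are kept.
    """
    mirrored = {}
    for name, (x, y, z) in joints.items():
        mirrored[swap_side(name)] = (2 * midline_x - x, y, z)
    return mirrored


# Hypothetical tracked positions (meters) for a right arm.
right_arm = {
    "right_shoulder": (0.2, 1.4, 0.0),
    "right_wrist": (0.5, 1.0, 0.3),
}
displayed = mirror_arm(right_arm)
print(displayed)
```

Under these assumptions, the movement of the working right arm would be drawn on the left side of the virtual body, which is the effect the article describes.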

“It is called the mirror neuron system,” said Nancy Devine, associate dean of the ISU School of Rehabilitation and Communications Sciences, who is a co-researcher on the project. “When you observe body movements, the cells in the brain that would produce that movement are active even though that arm isn’t being used.”

She said if you just look at brain activity, in some areas of the brain you can’t distinguish an active movement from an observed movement.

“So, if you take someone who has had a stroke and can’t use one arm, you can take their arm that is still working and reflect it to the other arm by putting them in this engaging virtual environment and we can be providing an exercise that is effective in helping rehabilitate the damaged areas,” Devine added.

Although the work on this specific project concludes at the end of the academic year, ISU’s work on this type of project may continue.

“We have created the portable virtual-reality device that the patient can wear, which projects the motion happening for the patients,” Perez-Gracia said. “We hope it will be a starting point for future projects on using virtual reality and robotics for helping in rehabilitation and training of human motion.”

This research has been taking place at the ISU Robotics Laboratory and the Bioengineering Laboratory at the Engineering Research Complex. On this project, Perez-Gracia and Devine have been working with the third researcher of the team, Marco P. Schoen, professor of mechanical engineering; Omid Heidari, a doctoral student in mechanical engineering; master of science students A.J. Alriyadh, Asib Mahmud, Vahid Pourgharibshahi and John Roylance; and undergraduate students Dillan Hoy, Madhuri Aryal and Merat Rezai. Eydie Kendall, assistant professor of physical and occupational therapy, also collaborated on the project.

“We have very good equipment here that we can do experiments with and that is very appealing,” said Heidari, who said the laboratory has become his second home. “Instead of just writing code on computers and stuff, we are actually doing something here that is very practical and very interesting. We did the motion capture, the kinematic part, and now we are working on finishing the virtual reality part of the project. We are getting closer to having a good model of what we want.”

To begin the project, Alriyadh and Roylance took motion and electromyography (EMG) measurements for the arm.

“Basically, part of what we are doing is taking EMG signals, electric signals that can be measured on the surface of the skin, to identify how the patient wants to move, even if the patient cannot move,” Alriyadh said.

These students have spent the past year on research projects related to augmented reality. Alriyadh worked on identifying the intended motion based on EMG signals. Roylance, who graduated last spring, was in charge of capturing the motion of the subjects.

Alriyadh’s part of the project was to identify the intended motion by reading EMG signals. He studied the signals that are sent from the brain to the motor points, the sources of the signal, to move the muscles. The issue patients face is that their brains are sending the signals, but no motion occurs.

“If I can find the relationship of that signal to the muscle, then I can identify the intended motion, even if the motion didn’t take place,” said Alriyadh. “So far, I can identify the signal, which is the progress I made and am writing my dissertation on. I can find the relationship between the signal and the motions.”
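The article does not say how the intended motion is extracted from the EMG recordings. Purely as an illustration, one common and much simpler idea is to rectify the signal, smooth it into an envelope, and flag the first moment the envelope rises above a threshold. The sketch below uses that swapped-in technique; the function names, window size, threshold, and sample values are all assumptions, not details from the project.

```python
from typing import List, Optional


def emg_envelope(samples: List[float], window: int) -> List[float]:
    """Rectify the signal, then smooth it with a trailing moving average."""
    rectified = [abs(s) for s in samples]
    envelope = []
    running = 0.0
    for i, value in enumerate(rectified):
        running += value
        if i >= window:
            # Drop the sample that just left the averaging window.
            running -= rectified[i - window]
        envelope.append(running / min(i + 1, window))
    return envelope


def detect_onset(samples: List[float], window: int = 3,
                 threshold: float = 0.5) -> Optional[int]:
    """Return the index where the envelope first exceeds the threshold, or None."""
    for i, level in enumerate(emg_envelope(samples, window)):
        if level > threshold:
            return i
    return None


# Hypothetical surface-EMG samples: quiet muscle, then a burst of activity.
signal = [0.02, -0.03, 0.01, -0.02, 0.9, -1.1, 1.0, -0.8]
onset = detect_onset(signal)
print(onset)
```

A detector like this only reports that the muscle was activated; relating the signal to a specific intended motion, as Alriyadh describes, is a harder problem that this sketch does not attempt.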

This capability will be part of future uses of the virtual reality device, which could assess motion intention and incorporate it into the system. Heidari is in charge of the kinematic modeling of the motion and its visualization in the virtual reality device. He has been working on a model that patients can see inside the virtual reality device. He has successfully created a model that simulates the motions of the arm and hand. It assists in identifying where joints are and which joints can be replaced for faster calculations.

One of Pourgharibshahi and Heidari’s tasks is to collect data from subjects with markers placed on their bones and joints. These subjects do basic movements such as opening a door, drinking or pointing. The markers track the paths of motion, which are then analyzed mathematically. This process is known as motion capture.
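As a rough illustration of the kind of mathematical analysis marker data allows, the sketch below computes the angle at a joint from three marker positions using the dot product of the two limb segments. It is a generic textbook calculation, not the team's pipeline; the function name `joint_angle_deg` and the marker coordinates are hypothetical.

```python
import math
from typing import Sequence


def joint_angle_deg(a: Sequence[float], b: Sequence[float],
                    c: Sequence[float]) -> float:
    """Angle in degrees at marker b, between segments b->a and b->c."""
    u = [ai - bi for ai, bi in zip(a, b)]
    v = [ci - bi for ci, bi in zip(c, b)]
    dot = sum(ui * vi for ui, vi in zip(u, v))
    norm_u = math.sqrt(sum(ui * ui for ui in u))
    norm_v = math.sqrt(sum(vi * vi for vi in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("markers must not coincide with the joint marker")
    # Clamp to guard against rounding slightly outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cosine))


# Hypothetical marker positions (meters) for one captured frame.
shoulder = (0.0, 0.0, 0.0)
elbow = (0.0, -0.3, 0.0)
wrist = (0.25, -0.3, 0.0)
elbow_angle = joint_angle_deg(shoulder, elbow, wrist)
print(round(elbow_angle, 1))
```

Repeating this for every frame of a recorded movement would give a joint-angle trajectory over time, one possible starting point for fitting a mechanism that reproduces the motion.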

“We get data from these motions, then find a mechanism that can do the same things,” Heidari said. “Robots can do these same motions very closely, not exact, but very close, to what the healthy [human] motion was.”

“Once we know the motion and all of the joints of the body we need to look at how the angles are related,” said Perez-Gracia. “We want to do this in real time, which means it has to be fast. Many joints move together. We need to identify which ones do and we want them to move in a human manner.”

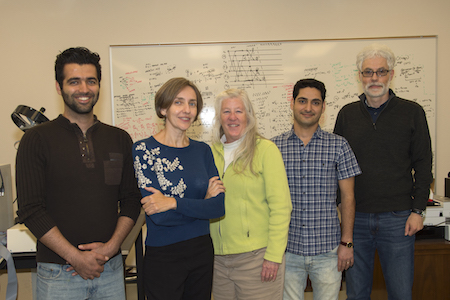

Photo information:

Middle photo – From left, ISU graduate student Omid Heidari, mechanical engineering Chair Alba Perez-Gracia, Associate Dean of the ISU School of Rehabilitation and Communications Sciences Nancy Devine, graduate student Vahid Pourgharibshahi, and mechanical engineering Professor Marco Schoen.

Bottom photo – Vahid Pourgharibshahi, ISU graduate student, capturing images of arm motions.